What Is Driving the Evolution of Algorithms?

Artificial Intelligence (AI) systems are on the verge of matching humans in a wide range of activities, such as driving a car, answering customer requests, diagnosing diseases or assisting elderly people. These AI systems are made of thousands of enmeshed, fast-evolving computer algorithms. The rate of progress is so high that it raises many concerns about the impact on human societies. But what are the laws that drive this evolution?

In this paper we propose a provocative answer to that question: we suggest that the Darwinist laws that drive biological and cultural evolution also drive the evolution of computer algorithms! The same mechanisms are at work to create complexity, from cells to humans, and from humans to robots.

More precisely, we will focus on Universal Darwinism, a generalized version of the mechanisms of variation, selection and heredity proposed by Charles Darwin, which has already been applied to explain evolution in a wide variety of other domains such as anthropology, music, culture or cosmology.

According to that approach, many evolutionary processes can be decomposed into three components: 1/ Variation of a given form or template, typically by mutation or recombination; 2/ Selection of the fittest variants; 3/ Heredity or retention, meaning that the features of the fit variants are retained and passed on.
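To make the three components concrete, here is a minimal genetic-algorithm sketch in Python. The task (maximizing the number of 1 bits in a bit string, the classic "OneMax" toy problem), the population size, the mutation rate and all function names are our own illustrative assumptions; they are not taken from the Universal Darwinism literature.

```python
import random

GENOME_LENGTH = 20  # illustrative size of a "form or template"


def fitness(genome):
    """Hypothetical toy task ("OneMax"): the more 1 bits, the fitter."""
    return sum(genome)


def mutate(genome, rng, rate=0.05):
    """Variation by mutation: flip each bit with probability `rate`."""
    return [1 - bit if rng.random() < rate else bit for bit in genome]


def recombine(mother, father, rng):
    """Variation by recombination: one-point crossover of two parents."""
    cut = rng.randrange(1, len(mother))
    return mother[:cut] + father[cut:]


def evolve(generations=50, size=30, seed=1):
    rng = random.Random(seed)
    population = [[rng.randint(0, 1) for _ in range(GENOME_LENGTH)] for _ in range(size)]
    best_history = []
    for _ in range(generations):
        # Selection: rank by fitness and keep the fittest half
        population.sort(key=fitness, reverse=True)
        survivors = population[: size // 2]
        best_history.append(fitness(survivors[0]))
        # Heredity/retention: survivors are kept and pass their bits on to offspring
        children = []
        while len(survivors) + len(children) < size:
            mother, father = rng.sample(survivors, 2)
            children.append(mutate(recombine(mother, father, rng), rng))
        population = survivors + children
    return population, best_history


population, history = evolve()
print(history[0], "->", history[-1], "of", GENOME_LENGTH)
```

Keeping the survivors unchanged (a form of "elitism") is one design choice among many: it guarantees that the best fitness never decreases, which mirrors retention, but Universal Darwinism itself does not require it.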

We will start by giving simple examples to illustrate how this mechanism fits well with the evolution of open source algorithms. That will lead us to consider the big digital platforms as organisms where algorithms are selected and transmitted.

These platforms have an important role in the emergence of Machine Learning techniques, and we will study that evolution from a Darwinist perspective in section 2.

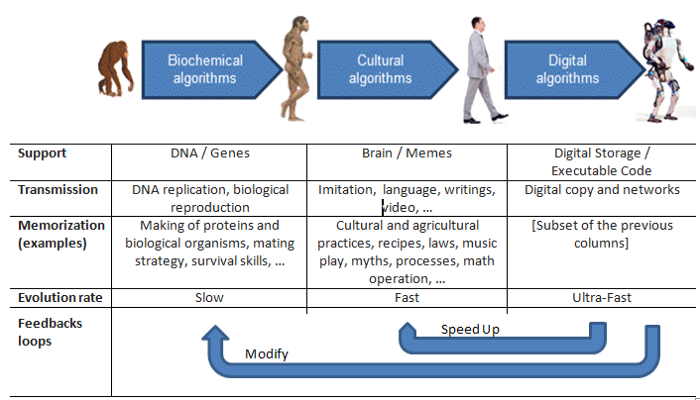

In the third section, we extend our scope and adopt the view that genes and some elements of culture can be seen as algorithms, and that the competition for jobs between humans and machines is a Darwinist process, driven by the move from human-centered algorithms to digital ones.

In the last section, we investigate in more detail some of the feedback loops that, from our point of view, underpin the evolution of biological, cultural and digital algorithms. These feedback loops help explain the remarkable rate at which algorithms are evolving today, and lead to troubling insights about transhumanism and the potential consequences of AI for our societies.

1. Darwinist Evolution of Computer Algorithms

Let's start with an introductory example. Many researchers and companies are working to improve the Linux kernel. Whether they work on new concepts or on slight algorithmic improvements, the path from an idea to a change included in a kernel release is long, and many ideas or proposals never make it through. But if a change succeeds, it will be kept for a long time in the Linux code base, serve as a baseline for future work, and may eventually be deployed on millions of computers running Linux, from smartphones to supercomputers.

We see that the description of Universal Darwinism above fits our example nicely: 1/ Variants of the numerous Linux algorithms are continuously created, typically by changing some part of an existing algorithm or by combining different ones (for example, algorithms developed in another context); 2/ The best variations, those that prove their benefit, are selected and put into a Linux kernel release; 3/ The release is integrated into thousands of products and becomes the basis for new evolutions. Just as genes survive and continue to evolve after the cells that carry them die, the algorithms in your smartphone continue to evolve after you replace it with one that has better algorithms (for instance, new or improved features).

More generally, the open-source movement follows such an evolutionary process. On GitHub, for example, the largest open-source development platform, millions of projects are continuously forked, mutated and recombined by millions of software developers who write new code or, more commonly, reuse code or templates from other projects. Changes are selected before being merged into the trunk; some pieces of code become stable and are reused in many projects or products, while most are forgotten. The analogy with gene evolution is striking: simple organisms are also "forked", their genes can be mutated or recombined, and sometimes changes are kept in their descendants.

Successful open-source code can be integrated and combined with proprietary code in large platforms that export services. Cloud computing platforms, such as the one owned by Amazon (Amazon Web Services) or those of its competitors Microsoft, Google or Alibaba, ease the composition of the countless algorithms behind these services. They are used by these companies, but also by startups to scale up new ideas at low cost. Many startups are created on top of these services; few of them succeed and become able to create and open-source their own variants or projects. These platforms act as catalysts: they reinforce the Darwinist evolutionary process described above by speeding up the combination, selection and retention of algorithms.

2. Darwinist Evolution of AI Algorithms

The services provided by these platforms make it possible to gather large amounts of data, which is also driving a major breakthrough in the dynamics of algorithm evolution, because Machine Learning techniques can create algorithms from data. Historically, most algorithms were crafted meticulously by humans. In contrast, machine learning systems use categories of meta-algorithms (e.g., artificial neural networks) to create new algorithms. These algorithms can be called 'models' or 'agents'. They are typically created by feeding the meta-algorithm very large data sets of examples ('big data'), but data sets are not the only way, as the next example shows.
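To illustrate what "creating an algorithm from data" means, here is a deliberately tiny Python sketch. For brevity it swaps in ordinary least-squares line fitting for the artificial neural networks mentioned above, and the function names are hypothetical. The "meta-algorithm" `fit_line` receives examples and returns a new function, `predict`, whose behaviour is derived from the data rather than written by hand.

```python
def fit_line(xs, ys):
    """A tiny "meta-algorithm": from (x, y) examples, build a new algorithm.

    Hypothetical stand-in for neural-network training: ordinary least squares
    for a straight line y = slope * x + intercept.
    """
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x

    def predict(x):
        # The generated algorithm: its coefficients were learned, not hand-coded.
        return slope * x + intercept

    return predict


model = fit_line([0, 1, 2, 3], [1, 3, 5, 7])
print(model(10))  # 21.0
```

A neural network plays the same role with far more parameters and a far richer family of functions, but the division of labour is the same: a generic procedure written by humans produces a specific algorithm from examples.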

Advances in Go-playing algorithms illustrate this evolution. For decades, programmers manually developed and improved algorithms to evaluate Go positions and moves. Now the best programs use machine learning and deep neural networks. These networks were first trained on a very large set of Go positions taken from human games. This technique allowed Google's AlphaGo to win against human world champions. But more recently, a new version called AlphaZero removed that constraint and learned to evaluate positions just by playing against itself. The process can be seen as Darwinist: after each game the winner is forked and some changes are introduced; the new versions play against each other, and the winners (the fittest) are selected, forked, mutated, and so on. Just by changing the initial rules, AlphaZero also learned in a few hours to become a chess grandmaster, illustrating both the genericity of the process and the speed of change.
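The Darwinian reading of self-play described above (fork the winner, mutate, let the versions play each other, keep the winners) can be sketched on a toy game. This is not how AlphaZero is implemented: the published system improves a single neural network from self-play games guided by Monte Carlo tree search. The sketch below instead evolves a population of lookup-table players for a hypothetical take-away game (21 stones, take 1 to 3 per turn, whoever takes the last stone wins); the game, population size and mutation rate are all illustrative assumptions.

```python
import random

PILE = 21          # hypothetical game: 21 stones on the table
MOVES = (1, 2, 3)  # each turn a player takes 1, 2 or 3 stones; taking the last one wins


def random_policy(rng):
    """A player: policy[n] is how many stones to take when n remain (index 0 unused)."""
    return [0] + [rng.choice(MOVES) for _ in range(PILE)]


def play(first, second, pile=PILE):
    """Play one game; return 0 if `first` wins, 1 if `second` wins."""
    players = (first, second)
    turn = 0
    while True:
        pile -= min(players[turn][pile], pile)
        if pile == 0:
            return turn
        turn = 1 - turn


def mutate(policy, rng, rate=0.1):
    """Fork a player and randomly change a few of its moves."""
    return [0] + [rng.choice(MOVES) if rng.random() < rate else m for m in policy[1:]]


def wins(policy, population):
    """Relative fitness: games won against every other player, from both seats."""
    total = 0
    for other in population:
        if other is not policy:
            total += (play(policy, other) == 0) + (play(other, policy) == 1)
    return total


def self_play(generations=100, size=16, seed=0):
    rng = random.Random(seed)
    population = [random_policy(rng) for _ in range(size)]
    for _ in range(generations):
        ranked = sorted(population, key=lambda p: wins(p, population), reverse=True)
        winners = ranked[: size // 2]                             # selection
        population = winners + [mutate(w, rng) for w in winners]  # fork + mutate
    return max(population, key=lambda p: wins(p, population))


champion = self_play()
print(champion[1:9])
```

Because fitness is measured only against the current population, this is a co-evolutionary loop with no external teacher. The known winning strategy for this game (always leave the opponent a multiple of 4 stones) gives a yardstick against which the evolved champion can be compared.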

At a lower level, a similar mechanism is at work in Reinforcement Learning, one of the key methods driving the explosion of AI that we are currently seeing. It is used, for example, in automatic translation, virtual assistants and autonomous vehicles. The aim is to optimize a sequence of actions to reach a goal (for example in Go, a sequence of stone placements that surrounds more territory than the opponent). In that approach, an agent (i.e. an algorithm) selects an action on the basis of its past experience. It receives a numerical reward, which encodes the success of the outcome, and seeks to learn to select the actions that maximize the reward, i.e. the fittest ones. Over time, the agent tends to learn how to maximize the accumulated reward. To improve the process, a large number of randomly initialized neural networks can be tested in parallel. The best ones are selected and copied with slight random mutations, and so on for several generations. This approach is called "neuroevolution" and is based on so-called "genetic algorithms", which illustrates the similarity with biological evolution. The fitness function, which rewards progress towards objectives, can effectively be replaced with a novelty metric, which rewards behaving differently from prior individuals. Researchers working on such promising AI techniques, like Ken Stanley, are explicitly inspired by natural evolution's open-ended propensity to perpetually discover novelty.
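A minimal neuroevolution sketch in Python follows, assuming a toy task in which a single tanh neuron must reproduce logical OR; the task, hyperparameters and function names are hypothetical. Randomly initialized networks are scored, the best are kept and copied with small Gaussian mutations, and the scoring function can be either an objective-based fitness (playing the role of the reward) or a novelty metric based on the distance to previously seen behaviours. The archive policy used here (store the behaviours of each generation's selected parents) is our own simplification, not the exact procedure of the novelty search literature.

```python
import math
import random

# Hypothetical toy task: one tanh neuron should output -1 for (0, 0) and +1
# otherwise (logical OR). Inputs, targets and hyperparameters are illustrative.
INPUTS = [(0.0, 0.0), (0.0, 1.0), (1.0, 0.0), (1.0, 1.0)]
TARGETS = [-1.0, 1.0, 1.0, 1.0]


def behaviour(weights):
    """Outputs of the network tanh(w0*x0 + w1*x1 + bias) on every input."""
    w0, w1, bias = weights
    return [math.tanh(w0 * x0 + w1 * x1 + bias) for x0, x1 in INPUTS]


def error(weights):
    """Squared error against the targets (lower is better)."""
    return sum((y - t) ** 2 for y, t in zip(behaviour(weights), TARGETS))


def fitness(weights, archive):
    """Objective-based score, akin to a reward; the archive is ignored."""
    return -error(weights)


def novelty(weights, archive, k=3):
    """Mean distance from this behaviour to its k nearest archived behaviours."""
    if not archive:
        return 0.0
    b = behaviour(weights)
    nearest = sorted(math.dist(b, other) for other in archive)[:k]
    return sum(nearest) / len(nearest)


def neuroevolve(score, generations=40, size=20, elite=5, sigma=0.3, seed=0):
    rng = random.Random(seed)
    # Randomly initialized networks (here: just three weights each)
    population = [[rng.gauss(0.0, 1.0) for _ in range(3)] for _ in range(size)]
    archive, best_errors = [], []
    for _ in range(generations):
        best_errors.append(min(error(w) for w in population))
        # Selection: keep the `elite` highest-scoring networks unchanged
        ranked = sorted(population, key=lambda w: score(w, archive), reverse=True)
        parents = ranked[:elite]
        # Simplified archive policy: remember the behaviours of the selected parents
        archive.extend(behaviour(w) for w in parents)
        # Heredity with variation: copies of parents with small Gaussian mutations
        children = [[g + rng.gauss(0.0, sigma) for g in rng.choice(parents)]
                    for _ in range(size - elite)]
        population = parents + children
    return population, best_errors


_, errors = neuroevolve(fitness)
print(f"best error: {errors[0]:.3f} -> {errors[-1]:.3f}")
```

Passing `novelty` instead of `fitness` to `neuroevolve` swaps the objective for a novelty metric without changing the rest of the loop, which is the substitution the paragraph above describes.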

3. Darwinism and the Digitalization of Human Algorithms

There is a lot of discussion these days about the impact of AI on human activities, and there is a consensus that robots and virtual agents powered by AI algorithms could replace humans in many activities. We know that humans can perform such activities thanks to millions of years of Darwinist evolution that developed their cognitive capabilities. Consequently, it is logical to think about human cognition in terms of algorithms as well, and to consider the subtle links between these different forms of algorithms from a Darwinian perspective. For example, digital algorithms such as those for computer vision are comparable to, and potentially exchangeable with, biological functions supported by genes. This analogy led robotics expert Gill Pratt to ask the question "Is a Cambrian Explosion Coming for Robotics?", because the 'invention' of vision during the Cambrian was a keystone of the explosion of life forms, and something similar might happen if vision is given to computers. Machines could, for example, learn how the physical world works by "seeing" thousands of videos, just as a child learns about gravity and inertia by observing the world. That is an active and very promising research topic for AI researchers.

The exchangeability of algorithms between humans and machines is also discussed by Yuval Noah Harari in his bestseller "Homo Deus: A Brief History of Tomorrow". One of the key concepts he introduces is that of the biochemical algorithms used by organisms to "calculate" what we usually call "feelings" or "emotions", such as how to make the best decision to avoid a predator or to select a partner for successful mating. Although it is not explicitly mentioned in the book, these algorithms follow a Darwinist process: they were evolved and improved through millions of years of evolution, and if the feelings of some ancient ancestor made a mistake, the genes shaping those feelings did not pass to the next generation. Harari argues that digital algorithms and biochemical algorithms have the same nature, and that the former are likely to become more effective than the latter for most tasks.

Behaviors or practices that spread from person to person within a culture, which Richard Dawkins named "memes", are also considered by the philosopher Daniel Dennett and by researchers such as Dawkins as following Universal Darwinist processes. Typical examples include cooking, agriculture, religious or war practices, but enterprise processes and know-how also fit in that category. The point is that most memes are algorithms: cooking recipes are indeed a common example used to explain to newcomers what an algorithm is. It thus seems possible to consider the digital transformation of processes and skills in enterprises as a move from human-centered algorithms to digital ones. That move was initially made by humans who manually developed increasingly automated business processes. But they are now complemented by machine learning, either to increase the level of automation (for example by leveraging AI algorithms that understand natural language or optimize complex activities) or to enhance the processes by analyzing the ever-growing data flow. Enterprises have no choice but to adapt. As the world becomes more and more digitally disrupted, the saying often attributed to Charles Darwin, "it is not the strongest of the species that survives, nor the most intelligent; it is the one most adaptable to change", is truer than ever.

This digital transformation also leads to a competition between humans and machines, as explained in Erik Brynjolfsson and Andrew McAfee's book "Race Against The Machine". It is indeed a Darwinist competition between forms of algorithms. For instance, Amazon's machine-learning-based algorithms now challenge those that booksellers acquired to advise customers. Amazon also develops AI to control datacenters, warehouses, business processes and logistics systems that require ever less human intervention. The algorithms behind all this are continuously improved by developers, many of whom are not even employed by Amazon, thanks to open-source communities. They are also continuously improved thanks to the huge amount of data acquired by the platform and to progress in Machine Learning. Humans can hardly compete.

4. Closing the Loop and Autocatalysis of Algorithms

We have seen that digital algorithms tend to replace or complement biochemical and cultural algorithms (including business processes), and that there are positive feedback loops between them. There are other feedback loops worth mentioning.

For example, the diffusion of knowledge is accelerated by information technologies such as the Internet, smartphones, online courses, search engines, question-answering systems, etc. But these information technologies rely on many digital algorithms. This means that digital algorithms accelerate the diffusion of cultural algorithms (for example, computer science training), which in turn can accelerate the development of digital algorithms.

Another, quite different, example is recent progress in DNA manipulation, such as CRISPR/Cas9 techniques. This progress is partly a consequence of digital algorithmic advances in DNA sequencing and classification. But DNA is the physical medium of the algorithms that create proteins. As a consequence, digital algorithms are able to change genetic algorithms. The consequences could be tremendous in the future, because the old mode of gene evolution, through random mutation, is being replaced with one controlled by digital algorithms. These changes are already applied in animals to treat some diseases. This gives some weight to the transhumanist view that these techniques could also be used to extend human biological capabilities.

The following diagram illustrates these relationships:

Platforms are enablers of these positive feedback loops because they act as catalysts: by reducing the distance and mismatch between data and algorithms, they increase the rate of reaction. In current corporate platforms, algorithms help developers develop algorithms and help machine learning specialists transform data into algorithms. These algorithms are then used in applications and services that in turn help acquire more data and earn money to invest in new versions, and so on. The largest AI companies, like Google, Facebook or Baidu, are striving to create ecosystems of developers who can come up with new algorithmic ideas, create new variants, combine them, etc. That is probably the main reason why they have open-sourced their sophisticated Deep Learning frameworks: to make developers' lives easier by having them use the same technology to share, modify or combine code. These AI companies also provide the means to host the outcomes on their platforms, thus increasing retention in their ecosystems.

In the near future, these cloud platforms will enable robots to share knowledge. As Gill Pratt pointed out, once an algorithm is learned by a machine (for example, a self-driving car that encounters an unusual situation), it could be replicated to other machines via digital networks. Data as well as skills can be shared, increasing the amount of data that any given machine learner can use. This in turn increases the rate of improvement.

More generally, progress in automated machine learning allows algorithms to create new algorithms with little or no human assistance. The trend is that reactions between algorithms in platforms are becoming more and more autocatalytic. According to some scientists, like Stuart Kauffman, autocatalysis plays a major role in the origin of life and, more generally, in the emergence of self-organized systems. Our feeling is that autocatalysis in algorithms is the major breakthrough in AI, and what best explains the exponential progress that we are seeing.
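The link between autocatalysis and exponential growth can be made concrete with the textbook rate law for the simplest autocatalytic reaction, in which a product X catalyses its own formation from a resource A (with concentrations x and a and rate constant k). Reading X as "algorithms" and A as the resources they draw on (data, compute, developers) is an illustrative analogy of our own, not a measured model:

$$
A + X \xrightarrow{\;k\;} 2X, \qquad \frac{dx}{dt} = k\,a\,x
$$

$$
a \approx a_0 \;\Rightarrow\; x(t) = x_0\, e^{k a_0 t}, \qquad a + x = c \;\Rightarrow\; \frac{dx}{dt} = k\,x\,(c - x)
$$

While the resource is abundant, growth is exponential. Because each reaction consumes one A and adds one X, a + x stays constant, so once the resource becomes limiting the same law turns into the logistic equation, whose S-shaped solution saturates at x = c.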

All developed countries are now aware of that breakthrough. They are striving to develop and control AI algorithms, because these are becoming an essential part of their sovereignty and independence. Controlling the innovation process and the platforms where algorithms are capitalized becomes of key importance. The Chinese and US governments, for example, and especially military and intelligence-related agencies like DARPA, invest massively in R&D around data processing and AI, often co-funded by the large commercial platform providers. The outcome is notably a flow of new ideas and algorithms; the fittest could at some point be incorporated into these platforms and also be used to increase nations' soft and hard power (AI-based weapons, intelligence systems, etc.) and their resilience. We see here a clear Universal Darwinist process, which comes with risks, as pointed out by Elon Musk, who in reaction co-founded and funded OpenAI, whose goal is to "counteract large corporations who may gain too much power by owning super-intelligence systems devoted to profits, as well as governments which may use AI to gain power and even oppress their citizenry". Its first project is an open-source platform to help collaboratively develop reinforcement learning algorithms that will remain patent-free. Such a platform is indeed the best way to avoid the retention of algorithms in some 'organisms' like governments and a few large companies. We will see whether such initiatives succeed, but they cannot be understood (from our point of view) without understanding the dynamics of algorithm evolution.

5. Conclusion

The way algorithms evolve is an important topic for understanding the evolution of our civilization. Looking back, this evolution seems unpredictable. For example, 20, 10 or even 5 years ago nobody was able to predict the evolution of AI algorithms or the growth of Facebook. However, that evolution is not entirely due to chance: it is partly the result of millions of rational decisions made by a myriad of actors such as software engineers, consumers, corporations and government agencies.

In this article we investigated the idea that the evolution of algorithms has similarities with the biological and cultural evolution that led to breakthroughs in the past, like the Cambrian explosion about 500 million years ago or the invention of agriculture about 12,000 years ago. It appears that the same principles of Universal Darwinism can yield useful insights into how algorithms evolve over time in many contexts, ranging from open-source software and communities to platforms, and from the digital transformation of our society to the progress of AI systems and their impact on society. We could find other examples that fit these principles well, in domains such as cybersecurity (co-evolution of malware and its countermeasures), unsupervised machine learning (like the promising Generative Adversarial Networks) or automated A/B testing.

This contribution does not claim to be scientific work, or to conclude anything about the relevance of Darwinism in other contexts. But it provides an insight into the evolution of algorithms, independently of their medium (genes, culture, or digital). We are convinced that thinking about algorithms as a whole is necessary to better understand the digital disruption we are living through, to figure out what the next stages could be, and to mitigate possible threats to our democracies.

Looking forward, our feeling is that a general theory of algorithm evolution is needed, which could be part of broader theories dealing with the self-creation of order in nature.

PS1: The views expressed in this paper are those of the author, and not necessarily those of the author's employer.

PS2: We will continue in other stories to investigate the relationships between information, algorithms and complexity. Stay tuned!