Xavier Azalbert, France-Soir

Modern Uses of Frameworks in Information Management: Redefining Truth and Decision-Making

Abstract: The third in a series of four, this article dissects how modern frameworks, these tools of organization and influence, manipulate information to shape truths and decisions. Historically anchored, they are now boosted by GAFAM algorithms, which orchestrate psychological capture via "community rules" and emotion loops, stifling critical thinking. These systems favor group conformity, relegating truth to biased consensus, as in a digital Truman Show. The section on psychological capture exposes how collective truths crush individual thinking, engendering a social apathy where error is neither recognized nor treated as a source of learning, but masked by an illusory transparency.

This dynamic, nourished by a culture of efficiency and by management that is allergic to failure, paralyzes creativity and diversity of thought. Faced with this conforming machine, a leap forward is necessary: to rehabilitate critical thinking, to dare to say "no", and to cultivate slow reflection in order to escape the prison of negotiated truths.

Article

This article is the third part of a series dedicated to frameworks, the methodological structures that organize our societies, our thoughts and our decisions. In the first article, we traced their history, from Sun Tzu's strategies to Jules Ferry's educational reforms and digital tools such as MITRE ATT&CK. The second explored the tension between human uniqueness – its disorder, its multidisciplinarity, its "madness" – and the "normatization" imposed by these frameworks, which threaten our creativity and our non-linearity.

This third part looks at the modern uses of frameworks in information management, where systematic and probabilistic approaches are redefining our relationship to truth and decision-making. In a data-saturated world, frameworks like DISARM, designed to counter disinformation, shape official narratives and "group truths," often at the expense of authentic knowledge. By exploiting cognitive biases, these tools, far from liberating, act as "barbed wire" or "electronic waves", capturing minds in a normative hatch. The VUCA, BANI, and RUPT models reveal a world that is too complex for traditional decision-making schemes, while information pollution and incomplete information aggravate biases.

Through examples such as the vote on compulsory vaccination in July 2021, where false assertions biased decisions, and drawing on France-Soir articles (L'information, c'est le pouvoir au peuple; VUCA, BANI et bannis; debriefings featuring Peter McCullough and Olivier Frot, doctor of law), we will see how these frameworks, supported by actors such as McKinsey, standardize information, often against the public interest. The next article will explore how to resist these frameworks to preserve freedom, truth, and dissent.

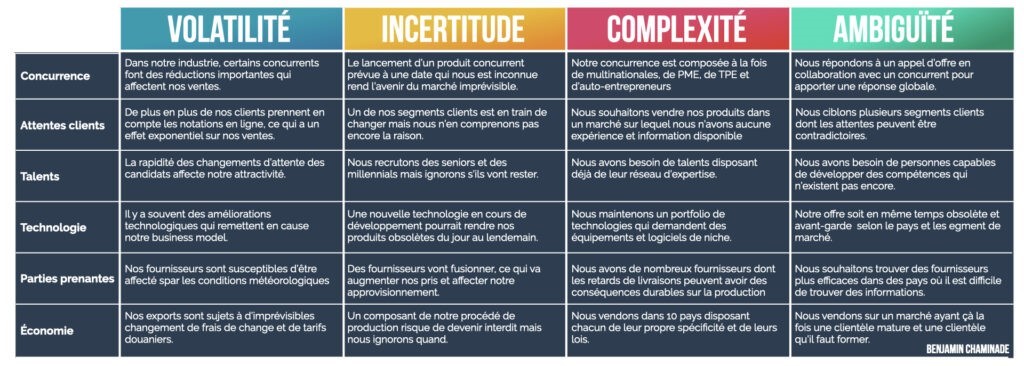

A changing world: VUCA, BANI, RUPT and the obsolescence of traditional decision-making processes

Imagine a raging ocean, where waves of information crash relentlessly: social networks, non-stop news channels, algorithms that amplify noise, polarize minds, and drown out the truth. We live in an era where information, once scarce, has become a relentless storm, driven by fast-moving crises – pandemics, geopolitical conflicts, climate change. This increasing complexity makes traditional decision-making schemes – plan, forecast, execute – as obsolete as a compass in a magnetic storm. As highlighted in the article "VUCA, BANI and Banished by the Method: Assault and Battery", the VUCA, BANI, and RUPT models attempt to map this chaos, revealing not only the inadequacy of linear approaches, but also the challenges of manipulated information.

- VUCA, born in the 1980s at the US Army War College, describes a world marked by Volatility (abrupt changes, such as stock market crashes), Uncertainty (unpredictability of crises), Complexity (global interconnections, such as supply chains), and Ambiguity (lack of clarity, as in health narratives). Used by companies to navigate digital disruption or the 2008 financial crisis, VUCA has shown its limits in the face of deeper upheavals, where information itself becomes a weapon. For example, during the COVID-19 pandemic, governments used VUCA to plan lockdowns, but conflicting narratives about masks or vaccines revealed its inability to deal with misinformation.

- BANI, proposed by Jamais Cascio, goes further, capturing the essence of a digital world: Brittle (fragile systems, such as cyber infrastructures, or a world much more uncertain than previously thought), Anxious (anxiety in the face of uncertainty, such as during lockdowns), Non-linear (unpredictable events, such as cyberattacks), Incomprehensible (opaque truths, such as vaccine contracts). This framework, better suited to the age of algorithms, reflects the anxiety of a society overwhelmed by conflicting narratives. In 2020, BANI could have guided the management of vaccination campaigns, but the official narratives, amplified by frameworks such as DISARM, made it possible to stifle scientific debate, reinforcing opacity. France-Soir criticizes these rigid frameworks, which ignore the non-linearity essential to truth, constrain or even prevent creativity and uniqueness, and lock people into a group truth.

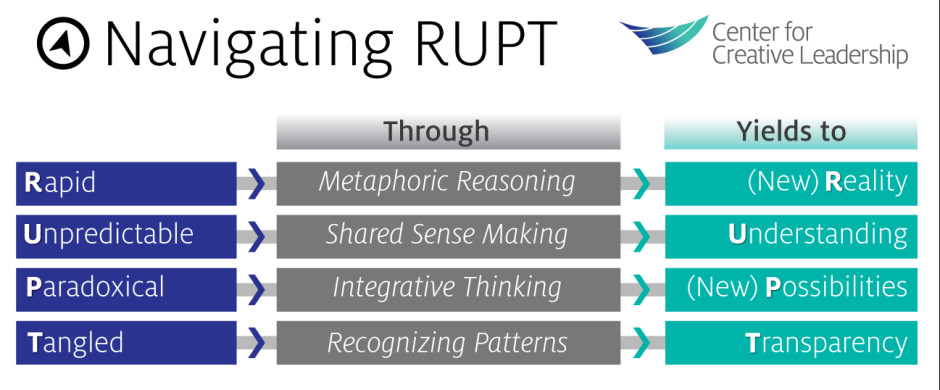

- RUPT, with its Rapid (lightning crises, such as viral scandals), Unpredictable (unforeseen events, such as cyberattacks), Paradoxical (counterintuitive solutions), and Tangled (entangled systems, such as social networks) dimensions, emphasizes speed and interconnection. Used in strategic foresight, RUPT has helped anticipate crises such as the tensions in Ukraine (2022), but it fails to counter disinformation and its excesses, where standardized narratives dominate facts. For example, reports on Ukrainian biological laboratories, dismissed as "conspiracy theories", have been marginalized by frameworks such as DISARM, as have questions about the origin of the virus and the effectiveness of early treatments against COVID-19.

These models show that the world has changed: linear approaches, inherited from the industrial age, are collapsing into non-linear chaos. However, instead of promoting true knowledge, the frameworks and ethics charters that are supposed to assist in managing information impose prefabricated truths, exploiting our disarray in the face of uncertainty.

Like a wave that sweeps everything away, this informational chaos calls for new tools, but these tools, far from guiding, risk chaining us, transforming information into a tool of control rather than a power to the people.

DISARM: A Framework for Shaping Group Truths

In this ocean of information, DISARM (Disinformation Analysis and Risk Management) stands like a beacon, promising to guide us through the storm of disinformation. Introduced in the previous article as a normative matrix, DISARM is a methodological framework designed to identify, analyze, and neutralize disinformation campaigns. But this beacon casts a disturbing shadow: far from protecting the truth, DISARM becomes a tool of control, weaving group truths that stifle authentic knowledge, as denounced by France-Soir in "L'information, c'est le pouvoir au peuple". To understand this ambivalence, let's dive into what DISARM is, how it works, who uses it, and how it redefines our relationship to truth.

What it is

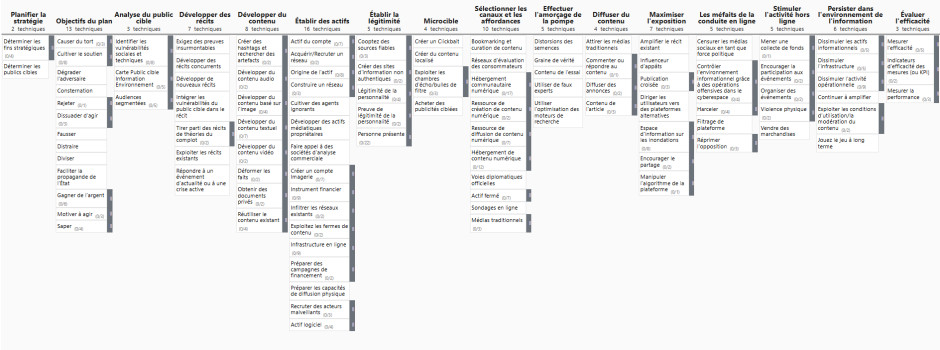

DISARM is an open-source framework, developed from 2019 by experts in cybersecurity and information analysis, notably through the Cognitive Security Institute and collaborations with organizations such as the US Department of State's Global Engagement Center. Inspired by frameworks like AMITT (Adversarial Misinformation and Influence Tactics and Techniques), DISARM aims to map the tactics, techniques, and procedures (TTPs) of disinformation campaigns, whether fake news, propaganda, or polarizing narratives. Its structure is based on a detailed taxonomy, divided into three pillars: actors (who spreads disinformation?), tactics (how? e.g., amplification, fake accounts), and content (what? e.g., narratives, memes). This systematic approach, inherited from cybersecurity frameworks like MITRE ATT&CK, aims to make disinformation predictable and neutralizable. DISARM is intended to be a universal tool, adaptable to various contexts, from elections to health crises.

How it works

DISARM operates in three stages: identification, analysis, and neutralization.

- Identification: Algorithms scan social networks, media outlets, and digital platforms for signals of misinformation, such as spikes in viral content or polarizing keywords (e.g., "dangerous vaccine"). These probabilistic algorithms rely on Big Data to detect anomalies, such as coordinated campaigns.

- Analysis: Signals are classified according to the DISARM taxonomy. For example, a meme criticizing vaccines could be labeled as "polarization tactics" or "defiance content." Analysts, often helped by AI, assess the potential impact (e.g., virality, targeted audience).

- Neutralization: Measures are taken, such as censorship (removal of content), deprioritization (reduction of visibility), or counter-propaganda (amplification of official narratives). These actions are based on partnerships with GAFAM, which adjust their algorithms to limit the reach of targeted content.

This systematic approach structures information into clear categories, while the probabilistic aspect predicts risks via statistical models. For example, DISARM can estimate the likelihood that an anti-vaccine narrative will go viral, by analyzing historical data and online behaviors. These models, although sophisticated, reduce human complexity to probabilities, ignoring the non-linearity of ideas, celebrated in the previous article.

Who uses it

DISARM is adopted by a range of actors with diverse motivations:

- Governments: Security agencies (e.g., FBI, MI6) use it to counter foreign campaigns, such as alleged Russian interference in elections.

- NGOs and think tanks: groups such as the Atlantic Council or the Global Disinformation Index use it to monitor polarizing narratives.

- Tech companies: GAFAM (Meta, Google, Twitter) integrate DISARM into their moderation policies, often under regulatory pressure.

- Media and news agencies: some collaborate with DISARM to validate information, reinforcing official narratives.

These actors, although heterogeneous, share one objective: to control information to maintain social order or protect interests (national security, profits, reputation). However, their motivations diverge: governments seek stability, tech companies maximize engagement, and NGOs pursue ideological agendas. This convergence of interests creates an ecosystem where DISARM becomes a tool of power, often at the expense of marginal voices.

How is it deployed?

DISARM is deployed in a variety of contexts, but its impact is particularly visible in health and political crises. Take the vote on compulsory vaccination in France in July 2021. On July 12, President Macron announced measures based on scientific assertions – vaccine efficacy, collective protection – that Professor Peter McCullough dismantled in a France-Soir debriefing (Analysis of Emmanuel Macron's scientific assertions). DISARM was used to amplify the official narrative: GAFAM algorithms, aligned with this framework, deprioritized McCullough's videos, while fact-checkers, often linked to DISARM's partner NGOs, labeled his analyses "partially false". Counter-propaganda campaigns, such as pro-vaccine ads, saturated the networks, exploiting availability bias to entrench the idea of vaccine "safety."

This strategy marginalized legitimate critics, who were accused of disinformation, creating a group truth that influenced parliamentarians. Accusatory inversions – accusing dissidents of manipulation – and paradoxical injunctions – demanding trust without transparency – reinforced this control. For example, vaccine skeptics were labeled "public health threats," a DISARM tactic that polarizes the debate beyond the interests of patients. In 2022, narratives about the conflict in Ukraine, such as reports on biological labs, suffered a similar fate: DISARM helped disqualify them as "Russian propaganda," despite open questions.

DISARM, by exploiting cognitive biases such as availability (repetition of official narratives) or confirmation bias (adherence to consensus), acts as "barbed wire", enclosing information to impose an artificial order. Its effectiveness is based on its ability to coordinate actors – governments, tech, media – in an ecosystem where information is filtered before it even reaches the public.

But this coordination, while it protects against certain manipulations, stifles dissent, which is essential to truth, as the previous article points out. DISARM, far from being a neutral tool, redefines truth as a product of power rather than a collective quest.

Information pollution and cognitive biases: biased decisions

If DISARM is a net, information pollution is the current that carries truths and lies in the same whirlwind. This pollution – information overload, intentional misinformation, conflicting narratives – blurs our compass, turning information into a maze where cognitive biases become traps. As France-Soir writes, information should be a power to the people, but it becomes a weapon when frameworks like DISARM exploit our psychological flaws to bias our decisions.

Cognitive biases are mental shortcuts, reflexes that help us navigate uncertainty, but that betray us under pressure. Confirmation bias leads us to believe what supports our ideas: in 2021, many bought into vaccine narratives, ignoring warnings from scientists like McCullough. Availability bias overstates what is repeated: messages about the "necessity" of vaccines, hammered home by the media, overshadowed debates about their side effects. The anchoring effect makes us give weight to an initial piece of information, even if false: Macron's assertions on July 12, 2021, served as a starting point for the vote on compulsory vaccination, despite their fragility. Authority bias amplified this error: parliamentarians, influenced by official experts, followed Macron without demanding the vaccine contracts. Framing bias, where presentation shapes perception, anchored the idea that vaccines are "the only solution", obscuring early treatments. Overconfidence bias, where decision-makers overestimate their knowledge, led to the neglect of emerging data, such as data on side effects.

GAFAM's algorithms aggravate this pollution. By creating filter bubbles, they lock individuals into echoes of their beliefs, reinforcing confirmation bias. In 2021, McCullough's critical videos were removed or delisted on YouTube, while official narratives dominated. These probabilistic algorithms amplify polarizing content to maximize engagement, whether anti-vaccine or pro-vaccine posts, fracturing the debate. In 2022, narratives about Ukraine – biological laboratories, origins of the conflict – were filtered by these same algorithms, limiting perspectives.

These technologies, often aligned with DISARM, turn information into a battlefield, where truth is a collateral victim.

This pollution is amplified when information is incomplete. In July 2021, parliamentarians voted without access to the vaccine contracts, as Olivier Frot points out in France-Soir (A contract so favourable to the industrialist, it seems abnormal to me). These opaque contracts protected the manufacturers, not the national interest. DISARM, by structuring information via algorithms, amplified the official narrative, marginalizing critics. This opacity has systemic consequences: the loss of public trust, visible in the demonstrations against the health pass, reflects a rejection of imposed narratives. Accusatory inversions – accusing skeptics of conspiracy theories – and paradoxical injunctions – demanding trust without transparency – stifled the debate, reinforcing biases. The 2021 vote, biased by these mechanisms, served industrial agendas, not public health.

Information pollution, orchestrated by frameworks, is not an accident: it is a strategy of alienation.

The norming or "normatization" of information: a consensus in the service of power

Information, in an ideal world, is a mosaic of perspectives, a kaleidoscope where each shard reflects a part of truth. But modern frameworks, such as DISARM, transform this mosaic into a uniform mirror, reflecting a single image: that of consensus. The "normatization" of information, orchestrated by news agencies, algorithms, and digital frameworks, creates a dominant narrative that pollutes minds. This consensus, often artificial, serves power – governments, industries, elites – to the detriment of the people.

News agencies, such as AFP or Reuters, are at the heart of this process. Their dispatches, picked up by thousands of media outlets, broadcast a standardized narrative, from geopolitical conflicts to health crises. In 2021, messages about vaccine efficacy, relayed without nuance, overshadowed critical analyses, such as those of McCullough. This mechanism is based on curation algorithms, which prioritize agency dispatches in social media and search engine feeds. For example, Google News, by highlighting "reliable" sources such as AFP, marginalizes independent media that report alternative perspectives. These algorithms, which are probabilistic, align with frameworks such as DISARM, strengthening consensus.

Fact-checkers aggravate this "normatization". Presented as guardians of the truth, they are often funded by tech companies (e.g. Meta) or NGOs linked to DISARM, such as the Poynter Institute. In 2021, hasty fact-checks disqualified theories on the side effects of vaccines, ignoring emerging data reported by France-Soir. These tools, by labeling criticisms as "false", act as relays of DISARM, standardizing information. Their ambiguity – truth or censorship? – reflects the danger of normative frameworks, which privilege order over plurality.

Economic interests underlie this "normatization". The pharmaceutical industry, for example, has benefited from vaccine narratives, "protected by opaque contracts", as Olivier Frot notes. The GAFAMs, by aligning their algorithms with DISARM, maximize their profits by avoiding regulations. These actors, in collaboration with news agencies, are creating an ecosystem where information serves economic power, not public health. In 2022, the standardised coverage of the conflict in Ukraine – downplaying certain issues, such as biological laboratories – protected geopolitical interests, marginalising debates.

DISARM reinforces this consensus: by targeting "disinformation", it censors dissenting voices, imposing a group truth. The vote on compulsory vaccination illustrates this drift: parliamentarians, drowning in a standardized narrative and deprived of the contracts, followed a consensus that ignored the national interest. This consensus divides as much as it unifies: debates on vaccines, polarized between "pro" and "anti", have fractured societies, stifling nuance. Non-linearity, a source of creativity (Article 2), is crushed by this linear logic, where a single truth prevails. Frameworks, like electronic waves, capture minds, transforming information into a tool for societal alignment, not a quest for knowledge.

McKinsey: frameworks at the service of "normatization"

In the shadow of official narratives, McKinsey & Company weaves frameworks that structure information with surgical precision. Since its founding in 1926, McKinsey has perfected the art of frameworks, from James O. McKinsey's Budgetary Control (1922), which predates the firm and harmonized financial decisions, to the General Survey Outline (1931), which standardized organizational analysis.

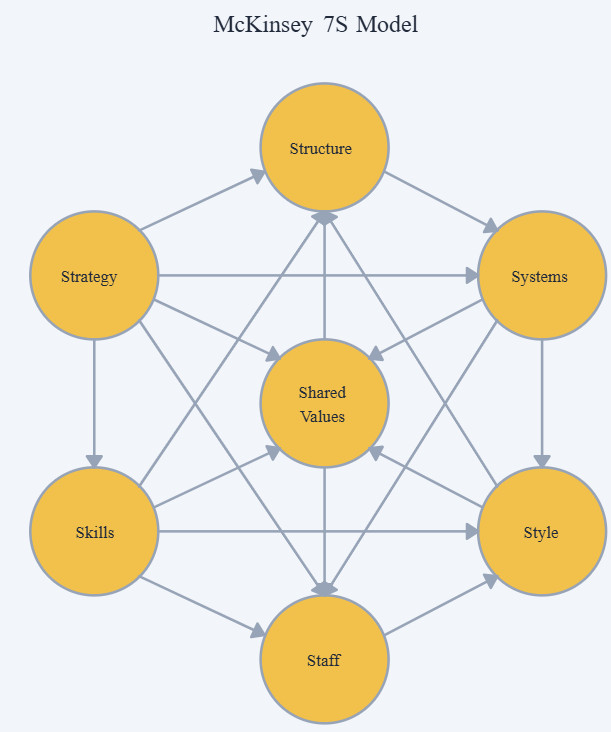

In the 1970s, frameworks such as the GE-McKinsey Matrix (strategic segmentation), the Minto Pyramid Principle (persuasive communication), and the 7S Framework (strategic organizational alignment) incorporated principles of persuasion, similar to marketing models (AIDA) or call center scripts.

These tools, by simplifying information, have shaped client strategies, but also standardised narratives.

During the COVID-19 crisis, McKinsey played a controversial role, advising governments on the management of vaccine campaigns. In France, its recommendations, based on frameworks such as the Minto Pyramid Principle, structured official communication: clear messages ("vaccines save lives"), selective arguments, and marginalization of critics. This advice, billed at a high price, amplified the standardized narrative, like the one supported by DISARM, but drew criticism, particularly over conflicts of interest with the pharmaceutical industry.

France-Soir has pointed out these excesses, where profits take precedence over the public interest.

McKinsey, like DISARM, illustrates the ambivalence of frameworks: tools of efficiency, but also invisible chains. By simplifying complexity, they standardize information, marginalizing alternative truths. For example, McKinsey has advised tech companies on content moderation, strengthening alignment with frameworks like DISARM. These partnerships, by structuring narratives, serve powerful agendas, to the detriment of plurality.

McKinsey's frameworks, by weaving smooth narratives, contribute to this spread of normatization, where truth becomes a product of power, not a collective quest.

Psychological Capture: Group Truths vs. Critical Thinking

If frameworks are barbed wire, the human mind is their prisoner. Overwhelmed by information pollution, the population finds itself trapped in a normative hatch, where group truths replace critical thinking. Like a wave that crashes endlessly, official narratives, amplified by cognitive biases and perverse mechanisms, keep individuals in a state of conformism, far from the emancipation promised by information. France-Soir, in "L'information, c'est le pouvoir au peuple", reminds us of this ideal: information should elevate, not chain.

Yet modern frameworks do the opposite, turning information into a tool of alienation.

Psychological capture operates through subtle but powerful mechanisms. Conformity bias, studied by Solomon Asch, shows how individuals adopt the majority opinion out of fear of rejection. In 2021, citizens, bombarded by narratives about the "necessity" of vaccines, often followed the consensus, even in the face of conflicting data, such as McCullough's. Fear, amplified by anxiety-provoking messages – health threat, social exclusion via the health pass – encouraged obedience. Paradoxical injunctions, such as requiring trust in opaque contracts, sowed confusion, discouraging questioning.

Stigmatization has muzzled dissidents. Calling someone a "conspiracy theorist" has become a weapon: in 2021, skeptical scientists, citizens, and even parliamentarians were marginalized, their doubts swept away as threats. This dynamic has intensified with social networks, where algorithms amplify accusations of conspiracy theories, creating smear campaigns. For example, accounts sharing McCullough's analyses have been flagged or banned, reinforcing conformity. This stigmatization, orchestrated by frameworks like DISARM, divides society, turning the debate into a war of camps.

In the long run, this capture has devastating effects: social apathy, where individuals give up public debate, and the erosion of intellectual curiosity, where the search for truth gives way to passive acceptance. Since 2021, the rise of censorship, perceived as "normal" in some democracies, illustrates this apathy. The demonstrations against the health pass, although numerous, have lost strength in the face of collective fatigue. This loss of curiosity, where citizens stop questioning narratives, is a triumph for normative frameworks, which thrive on indifference.

Parliamentarians, during the July 2021 vote, embody this capture: influenced by a standardized narrative and deprived of complete information, they gave in to conformism, betraying the national interest, as France-Soir notes. Frameworks, like electronic waves, manipulate biases – conformity, availability, authority – to impose prefabricated truths. Information, instead of elevating, becomes a tool of control, a hatch where non-linearity and dissent (Article 2) are stifled.

It is not information that liberates, but a matrix that imprisons, where the quest for knowledge is replaced by blind adherence.

This psychological capture, amplified by group truths, engenders a deleterious social apathy, where the individual, overwhelmed by conformity, gives up questioning or acknowledging his mistakes. Far from promoting liberating transparency, this dynamic feeds a paralyzing confusion, where collective biases, reinforced by GAFAM algorithms and their "community rules", favor immediate emotion at the expense of slow thinking. These algorithms, designed to capture attention, encourage impulsive reactions rather than critical thinking, trapping individuals in a reassuring yet biased echo chamber, like the illusion of The Truman Show. Saying "NO" becomes an act of courage, because critical thinking, which learns from its mistakes and frees itself from the herd, is supplanted by emotional ease and the fear of "what will people say".

This conformity, often masked under a veneer of rationality, limits the individual by reducing him to a sterile norm, stifling any possibility of divergence.

This apathy is not only the result of oppressive social norms, but also of an ecosystem that punishes error and values efficiency at the expense of imagination. Management that is intolerant of failure, cultures obsessed with performance and the standardization of ideas crush the diversity of ways of thinking, which is essential to creativity. As the article points out, creativity is not preserved by declarations of principle: it requires a conscious effort, cultivated in the details of everyday life, to encourage experimentation and learning by mistakes. Conversely, forgetting these principles, exacerbated by technological and social systems that favor conformity, locks the individual in an invisible prison where truth becomes a negotiated commodity, not a quest.

Refusing this drift requires rehabilitating critical thinking, not as a simple tool, but as an act of resistance in the face of collective paralysis.

Coming out of the hatch to find the truth

Modern frameworks promise to guide us through the chaotic ocean of information. But, like barbed wire, they capture minds, imposing group truths that skew decisions and stifle knowledge. Decision models revealing a world too complex for linear patterns open the door to perverse exploitation: frameworks like DISARM, supported by the advice of actors like McKinsey, are diverted from their original intent and used to exploit cognitive biases – conformity, availability, authority, framing – to standardize information. The vote on compulsory vaccination in July 2021, based on false assertions and incomplete data, illustrates this drift: parliamentarians, captured by an official narrative, gave in to conformism, betraying the public interest. Information should give power to the people, not a weapon to the powerful.

Yet, in this chaos, there is hope. Dissension, critical thinking, and non-linearity are flames that can pierce the normative hatch. By defying fear, rejecting stigma, questioning imposed truths, we can restore the power of information to elevate. The next article will explore how to resist these frames, cultivating the freedom and creativity to break up the barbed wire.

Until then, let's remember: humans are not machines, nor are they cogs in a matrix. They are waves, a magnificent chaos, and it is in this disorder that they can find the truth.